It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is most adaptable to change.

Over the last decade, visual perception algorithms have made remarkable progress, driven by layered differentiable models optimized end to end. Despite these advances, deploying such algorithms in open-world scenarios poses significant challenges. To operate effectively in open-world settings, they must support open-set recognition and open-vocabulary learning while remaining robust to distribution shifts. Moreover, Open World Visual Understanding Systems (OWVUS) are expected to handle continuous streams of real-world data and adapt to non-stationary environments with minimal labelling. This calls for semi- or fully-automatic data engines that couple model learning with incremental data curation and labelling, possibly assisted by human intervention. The foundational pillars of OWVUS are data and annotation efficiency, robustness, and generalization, and these challenges can potentially be addressed within a unified framework known as Open-set Unsupervised Domain Adaptation (OUDA).

In general, visual signals can be decomposed into low- and high-frequency components. The former unlocks the open-vocabulary capabilities of Vision-Language Models (VLMs) by establishing a well-aligned visual-semantic space. Conversely, the latter undermines the generalizability of deep neural networks to unseen or novel domains, as networks may overfit to domain-specific styles and thereby capture spurious correlations. OUDA serves as a nexus connecting a broad spectrum of research fields, including generative modelling, semi-supervised learning, meta-learning, open-set recognition, open-vocabulary learning, out-of-distribution detection, few-/zero-shot learning, contrastive learning, unsupervised representation learning, active learning, continual learning, and disentanglement of factors of variation. Its primary objective is to transfer knowledge from annotated source domains, or from pre-trained models in the case of source-free UDA, to unlabelled target domains. This transfer requires addressing both covariate and semantic shifts, which correspond to variations in the high- and low-frequency components of visual signals, respectively. In essence, OUDA leverages methods developed in these research areas to combat covariate or semantic shifts. In turn, addressing distribution shifts becomes unavoidable when any research problem from these areas is extended to a more general and practical setting.
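
As a rough illustration of the low-/high-frequency decomposition, the sketch below splits a grayscale image with an ideal circular low-pass filter in the Fourier domain and keeps the residual as the high-frequency part. The function name `split_frequencies` and the cutoff `radius` (a fraction of the sampling frequency) are hypothetical choices for illustration; actual methods may use different filters or treat amplitude and phase separately.

```python
import numpy as np


def split_frequencies(image: np.ndarray, radius: float = 0.1):
    """Split a 2-D grayscale image into (low, high) frequency parts.

    Illustrative sketch: `radius` is an assumed cutoff in cycles per pixel,
    not a value prescribed anywhere in the text.
    """
    h, w = image.shape
    # Centred spectrum: low frequencies end up in the middle of the array.
    spectrum = np.fft.fftshift(np.fft.fft2(image))

    # Frequency coordinates (cycles per pixel), shifted to match the spectrum.
    fy = np.fft.fftshift(np.fft.fftfreq(h))[:, None]
    fx = np.fft.fftshift(np.fft.fftfreq(w))[None, :]
    low_mask = np.sqrt(fx**2 + fy**2) <= radius  # ideal circular low-pass

    # Back to the spatial domain; the imaginary part is numerical noise.
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * low_mask)).real
    high = image - low  # residual: edges, textures, fine detail
    return low, high
```

By construction `low + high` reproduces the input exactly, so the two components can be manipulated independently (for example, perturbing only `high` as a form of style augmentation) and then recombined.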

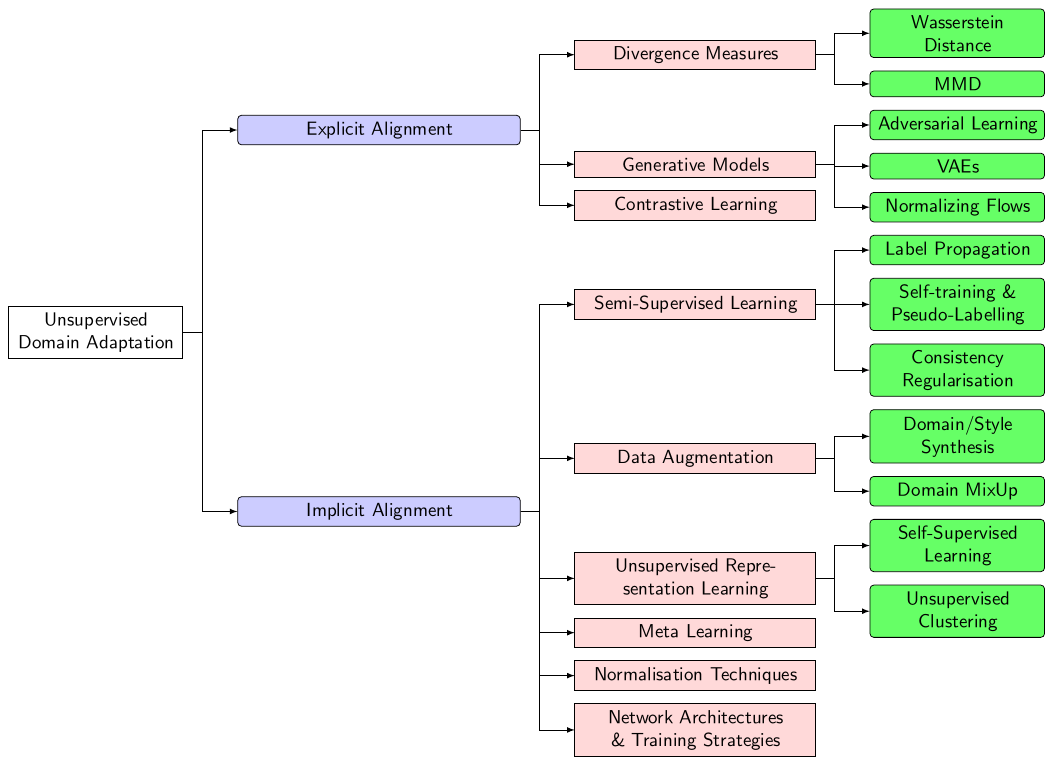

Fig-1: The taxonomy of UDA methods.

OUDA integrates open-set recognition/open-vocabulary learning and domain adaptation/generalization within a unified framework, aiming to address high-level semantic shift and low-level covariate shift simultaneously; it therefore inherits compounded challenges from both research domains. Recent work has made rapid progress in building sophisticated open-vocabulary detectors and segmentors, facilitated by VLMs trained on web-scale image-text pairs, which offer a comprehensive prior of the real world. Central to this progress is the effort to bridge the granularity gap between coarse-grained visual-semantic associations and fine-grained visual understanding objectives. Predominant solutions fall into three main categories: (1) incorporating fine-grained awareness into pre-training recipes; (2) transferring knowledge from VLMs to downstream fine-grained visual understanding tasks; and (3) amalgamating Vision Foundation Models (VFMs) to leverage their complementary expertise, which stems from distinct pre-training objectives. Besides, handling diverse vision tasks in the open-set scenario with a unified architecture and a single set of weights has attracted increasing attention as a step towards general-purpose vision foundation models.

In the emerging task of Open-World Object Detection (OWOD), which combines open-set recognition with class-incremental learning, the inherent open-vocabulary capability of VLMs makes it convenient to identify unknown classes. However, specialized components remain indispensable for discovering novel classes. Equipping a neural network with the ability to say NO when facing unfamiliar input remains challenging, even with models like CLIP. In vision tasks in particular, developing a class-agnostic object localizer that generalizes to novel classes is still an open question. This is critical for two-stage open-world detectors and segmentors, since generating high-quality proposals for unknown classes is pivotal. Recently, a promising trend has emerged of tackling class-agnostic object discovery without any manual annotation by leveraging the pre-trained features of self-supervised Vision Transformers (ViTs). However, these algorithms still struggle in complex, scene-centric scenarios. OUDA introduces a more complicated pipeline than closed-set UDA: unknown classes must first be detected or rejected before cross-domain alignment, with the dominant solutions illustrated in Fig-1. It has been demonstrated that overlooking unknown classes during domain alignment can lead to negative transfer or even catastrophic misalignment. As far as I know, existing methods for open-set recognition or novel class discovery largely rely on heuristics, such as one-vs-all classifiers, entropy-based separation, inter-class distance or margin-based methods, and zero-shot predictions from VLMs.
Furthermore, separating (target-)private samples into semantically coherent clusters, rather than lumping them together as a single generic unknown class, is an even more formidable challenge that requires exploiting the intrinsic structure of unseen or novel classes.
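
To make two of the heuristics above concrete, here is a minimal sketch, not any specific published method: entropy-based separation rejects a sample as unknown when the normalised entropy of its softmax prediction exceeds a threshold, and the rejected samples can then be grouped with plain k-means instead of being treated as one generic unknown class. The threshold of 0.5, the label -1 for unknown, the assumption that the number of novel classes `k` is known, and all function names are illustrative assumptions.

```python
import numpy as np


def normalised_entropy(logits: np.ndarray) -> np.ndarray:
    """Softmax entropy divided by log(C): 0 = one-hot, 1 = uniform (assumes C >= 2)."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    ent = -(probs * np.log(np.clip(probs, 1e-12, None))).sum(axis=1)
    return ent / np.log(logits.shape[1])


def reject_unknown(logits: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Closed-set argmax, or -1 (hypothetical 'unknown' label) when entropy is high."""
    preds = logits.argmax(axis=1)
    return np.where(normalised_entropy(logits) > threshold, -1, preds)


def kmeans(features: np.ndarray, k: int, iters: int = 50) -> np.ndarray:
    """Plain Lloyd's k-means with deterministic farthest-point initialisation."""
    centres = [features[0]]
    for _ in range(1, k):
        # Next centre: the sample farthest from every centre chosen so far.
        d = np.min([((features - c) ** 2).sum(axis=1) for c in centres], axis=0)
        centres.append(features[d.argmax()])
    centres = np.stack(centres)

    labels = np.zeros(len(features), dtype=int)
    for _ in range(iters):
        # Assign each sample to its nearest centre (squared Euclidean distance).
        dists = ((features[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each centre to the mean of its members; keep empty clusters fixed.
        new = np.stack([
            features[labels == j].mean(axis=0) if np.any(labels == j) else centres[j]
            for j in range(k)
        ])
        if np.allclose(new, centres):
            break
        centres = new
    return labels
```

In a pipeline, `kmeans` would be run only on the features of samples for which `reject_unknown` returns -1. Estimating `k` for genuinely novel classes is itself non-trivial, which is part of why this problem remains open.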

Scaling up pre-training data with minimal human intervention has proven critical for foundation models such as GLIP and SAM. Particularly where manual annotation is resource-intensive, there is a pressing need for automatic data annotation frameworks. Such a framework should not only generate reliable pseudo labels but also continually expand its concept pool, which demands both resilience to domain shifts arising from heterogeneous data sources and open-set recognition capability. In video action recognition, this task is referred to as Open-set Unsupervised Video Domain Adaptation (OUVDA), which remains largely unexplored. This emerging direction is inherently more complex due to the additional temporal dimension and the scarcity of large-scale, diverse datasets, presenting unique challenges that warrant further investigation. A closed learning loop, in which model updating and dataset expansion evolve together, lays the groundwork for OWVUS capable of continual self-development over time. From a data-centric standpoint, the challenge is to construct a dynamic dataset that consistently absorbs novel semantic categories and continually and actively selects relevant samples through human-machine synergy. Continual learning, characterized by rapid adaptation to evolving data distributions and potential encounters with unseen classes while avoiding catastrophic forgetting, can thus be integrated with OUDA to fulfil this objective.
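
The closed loop described above can be sketched, very loosely, as one round that routes unlabelled samples either to automatic pseudo-labelling or to a human annotator. The confidence threshold, the annotation budget, the use of least-confidence sampling and the name `split_for_annotation` are all illustrative assumptions rather than a prescribed recipe; a full data engine would also need the open-set rejection and domain-shift handling discussed earlier.

```python
import numpy as np


def split_for_annotation(probs: np.ndarray, conf_threshold: float = 0.9, budget: int = 2):
    """One hypothetical data-engine round over softmax outputs `probs` (N x C).

    Returns (pseudo_idx, pseudo_labels, query_idx):
      - samples with max probability >= conf_threshold are pseudo-labelled;
      - the `budget` least-confident remaining samples are queried from a human.
    """
    conf = probs.max(axis=1)
    pseudo_idx = np.flatnonzero(conf >= conf_threshold)
    pseudo_labels = probs[pseudo_idx].argmax(axis=1)

    rest = np.flatnonzero(conf < conf_threshold)
    # Least-confidence sampling: lowest max-probability first.
    query_idx = rest[np.argsort(conf[rest], kind="stable")[:budget]]
    return pseudo_idx, pseudo_labels, query_idx
```

Samples in `pseudo_idx` would be added to the training set with their pseudo labels, samples in `query_idx` would be labelled by annotators, and the model would be retrained before the next round.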

To conclude, the prospect of unfolding possibilities and burgeoning potential in the field of OUDA and its synergies with other areas like continual learning and active learning fills me with anticipation and enthusiasm.

@misc{Cai2024OpenWorld,
  author = {Xin Cai},
  title = {Random Thoughts on Open World Vision Systems},
  howpublished = {\url{https://totalvariation.github.io/blog/2024/open-world-vision-systems/}},
  note = {Accessed: 2024-02-08},
  year = {2024}
}